Split Learning and Inference

Split learning removes barriers to collaboration across a range of sectors, including healthcare, finance, security, logistics, governance, operations and manufacturing.

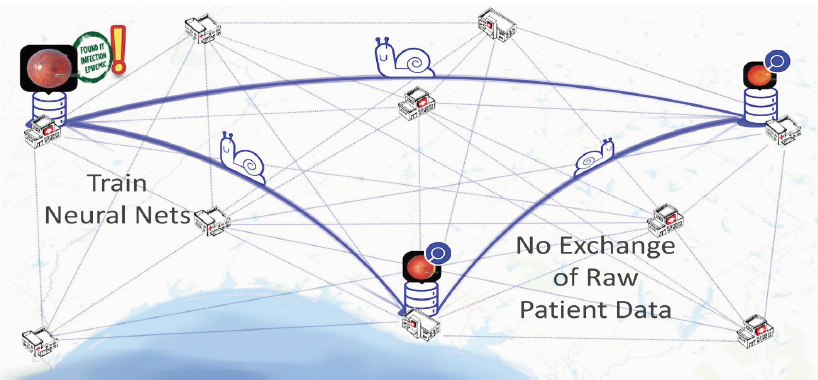

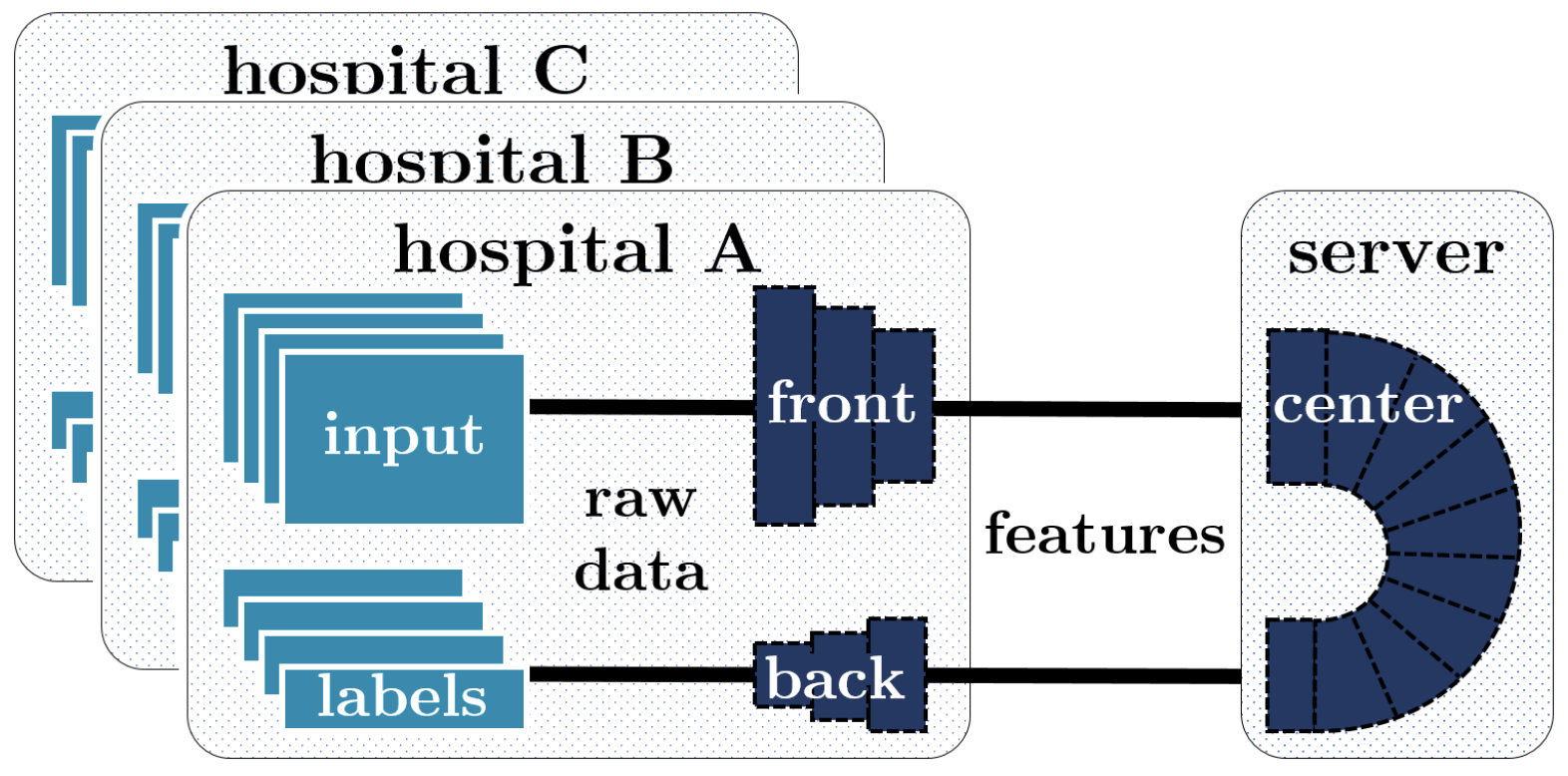

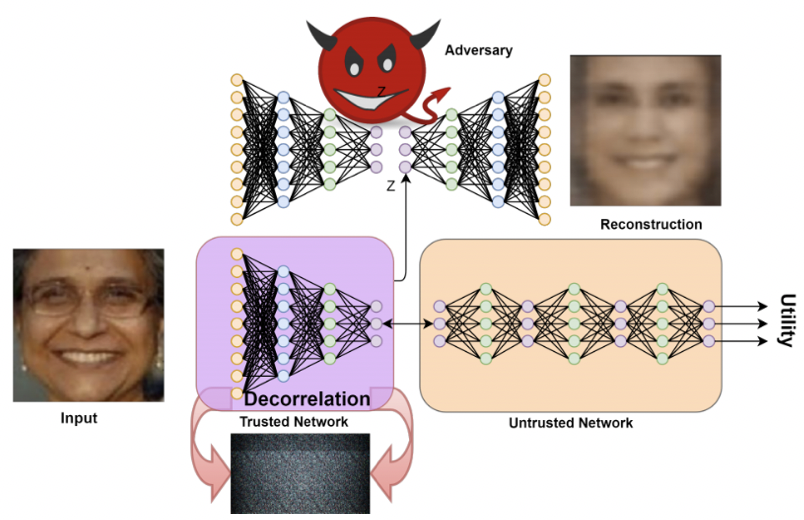

For example, the split learning configuration shown below lets resource-constrained local hospitals with small individual datasets collaborate to build a machine learning model that offers superior healthcare diagnostics, without sharing any raw data with one another, as trust, regulation and privacy require.

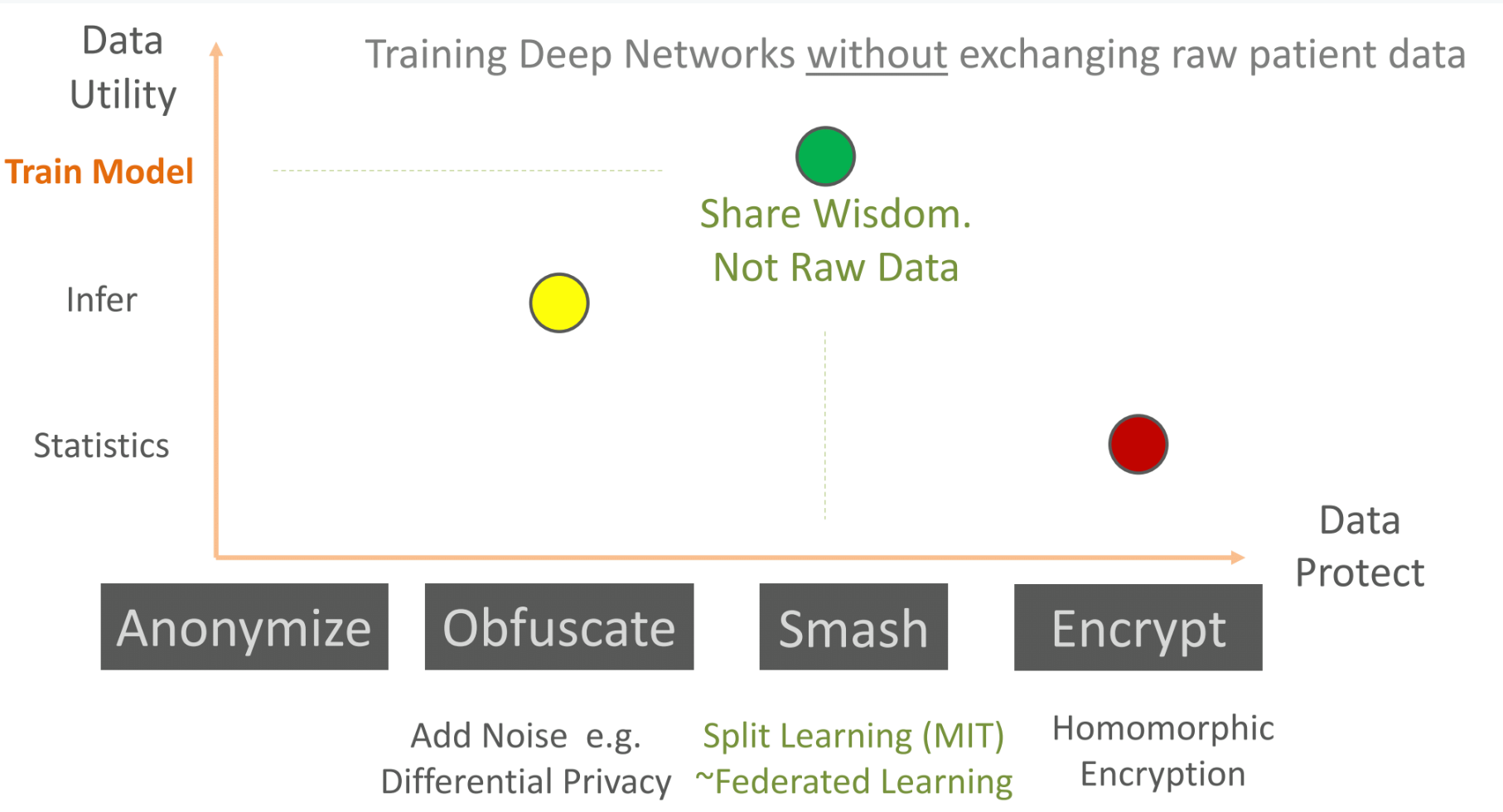

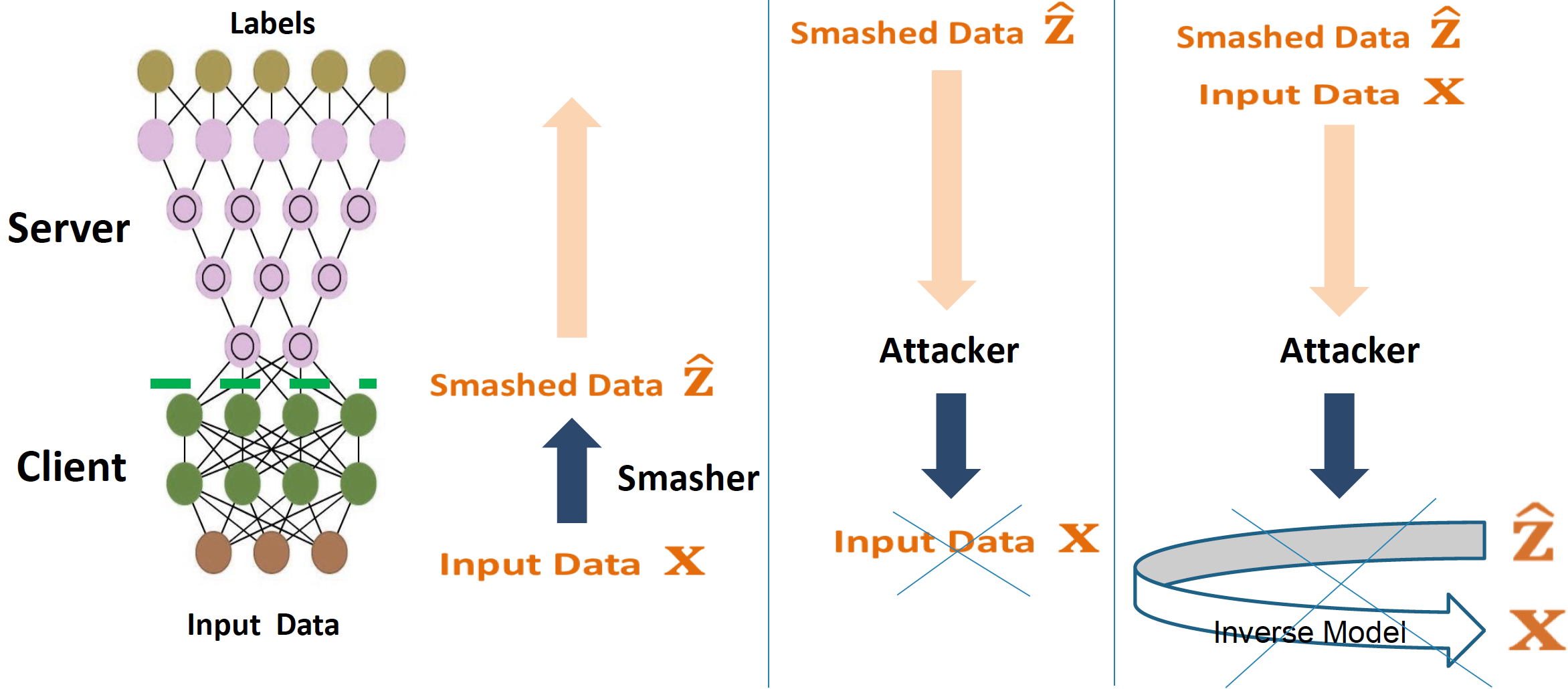

Landscape of related work: As shown below, split learning fills a gap in the landscape: it makes it possible to perform advanced AI tasks, such as training machine learning models in distributed settings, with a substantial level of data protection.

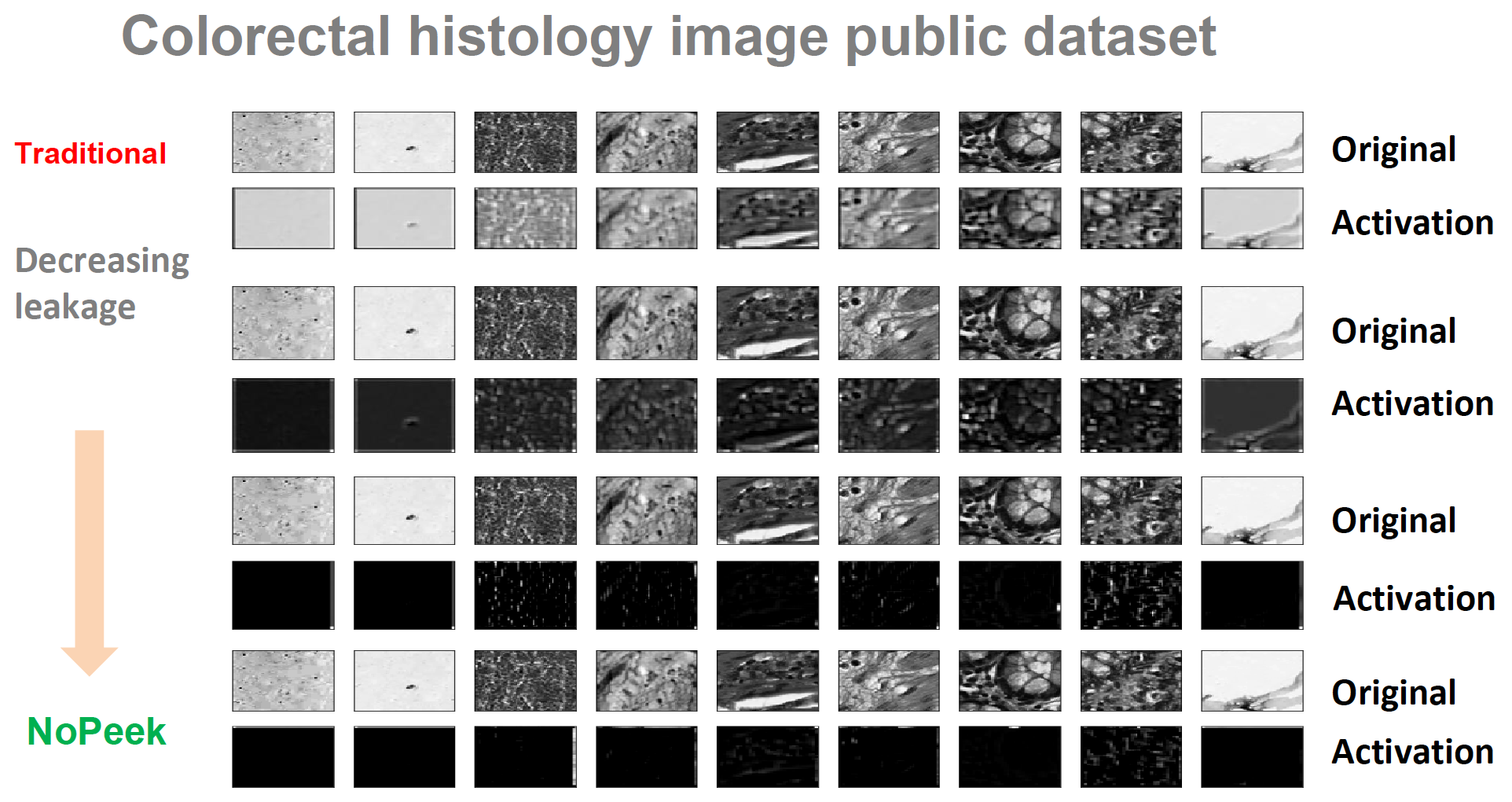

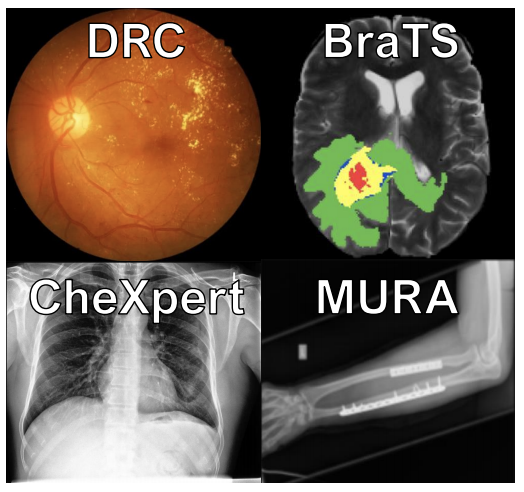

SplitNN Architectures, Leakage Prevention and Diverse Applications

Efficiency

Split learning’s computational and communication efficiency on clients

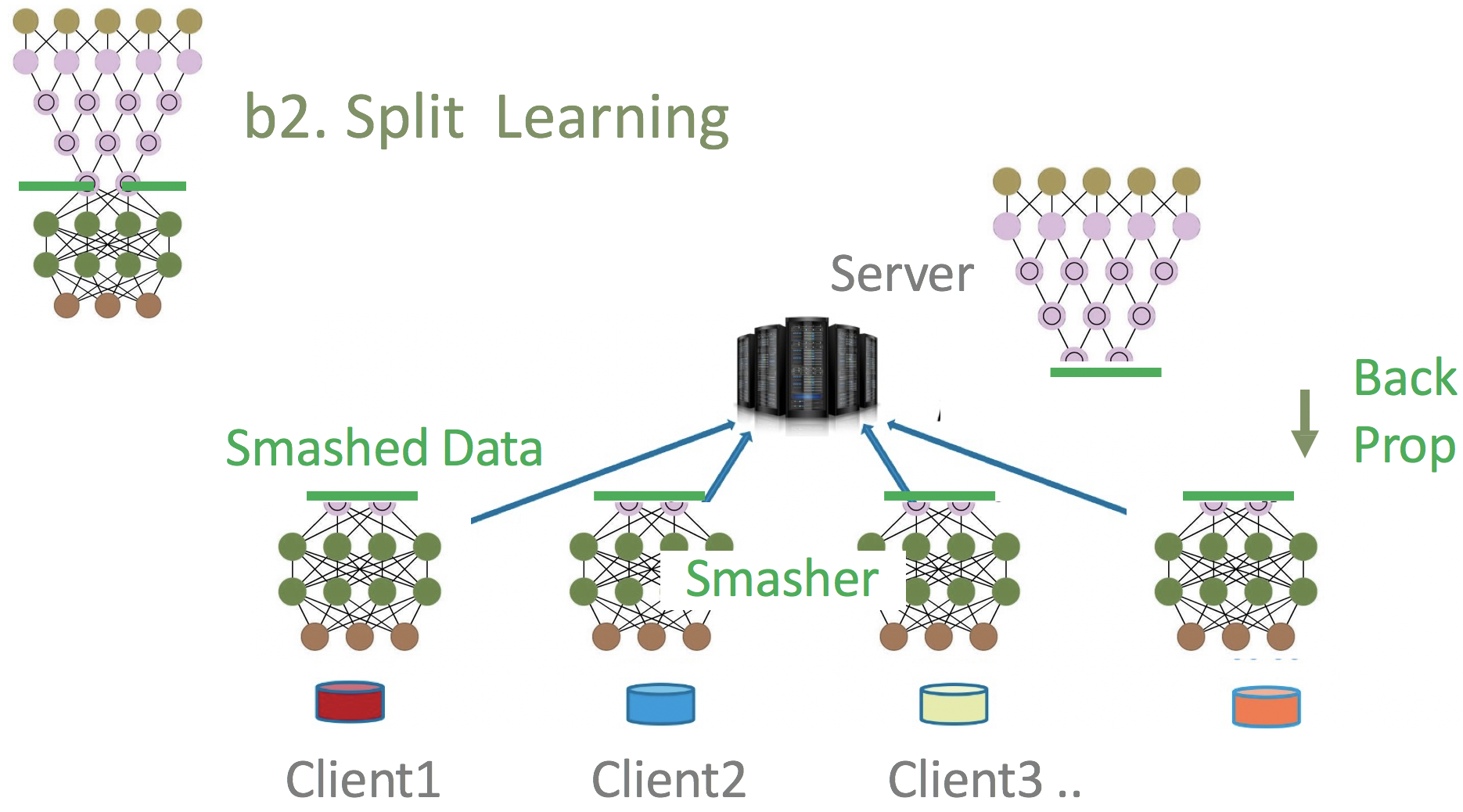

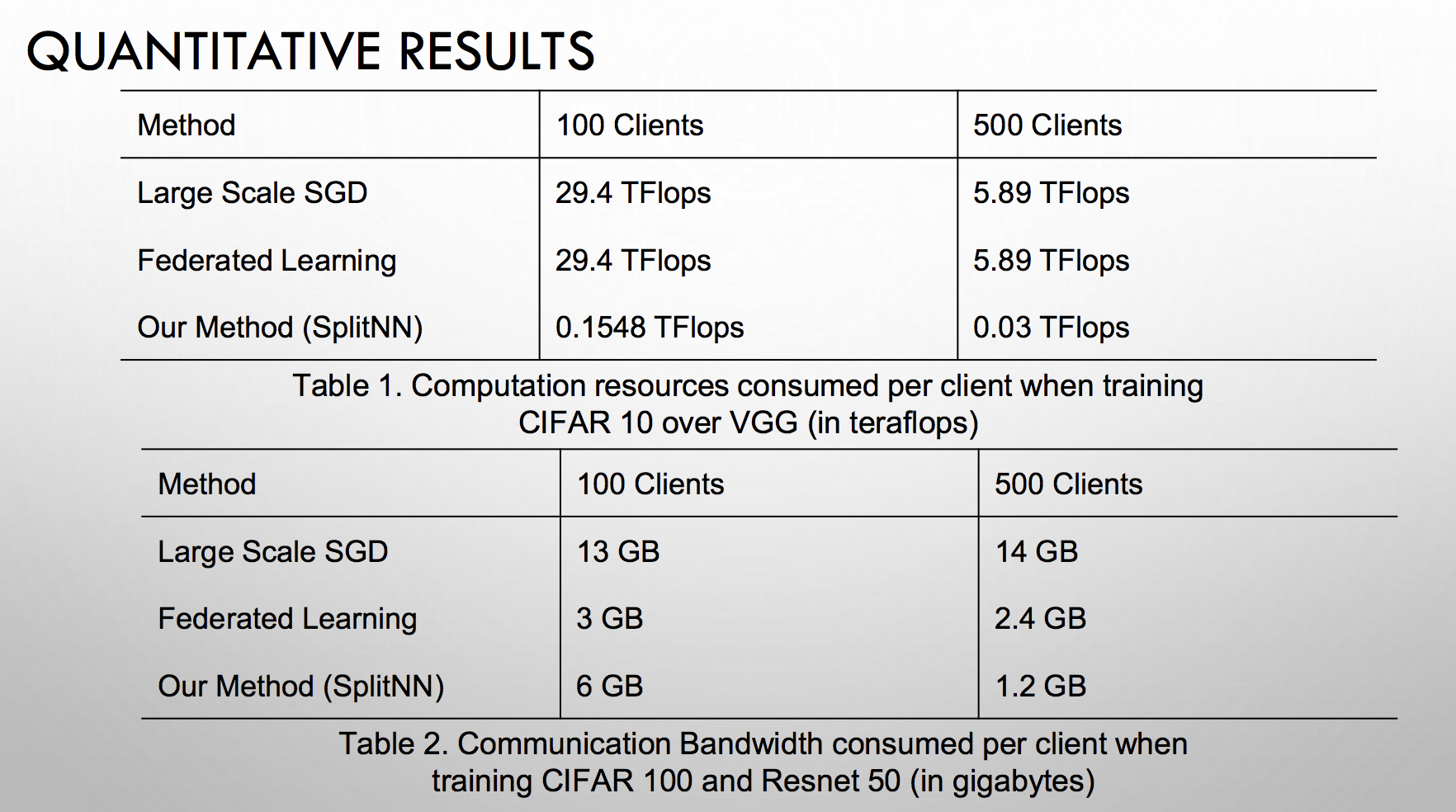

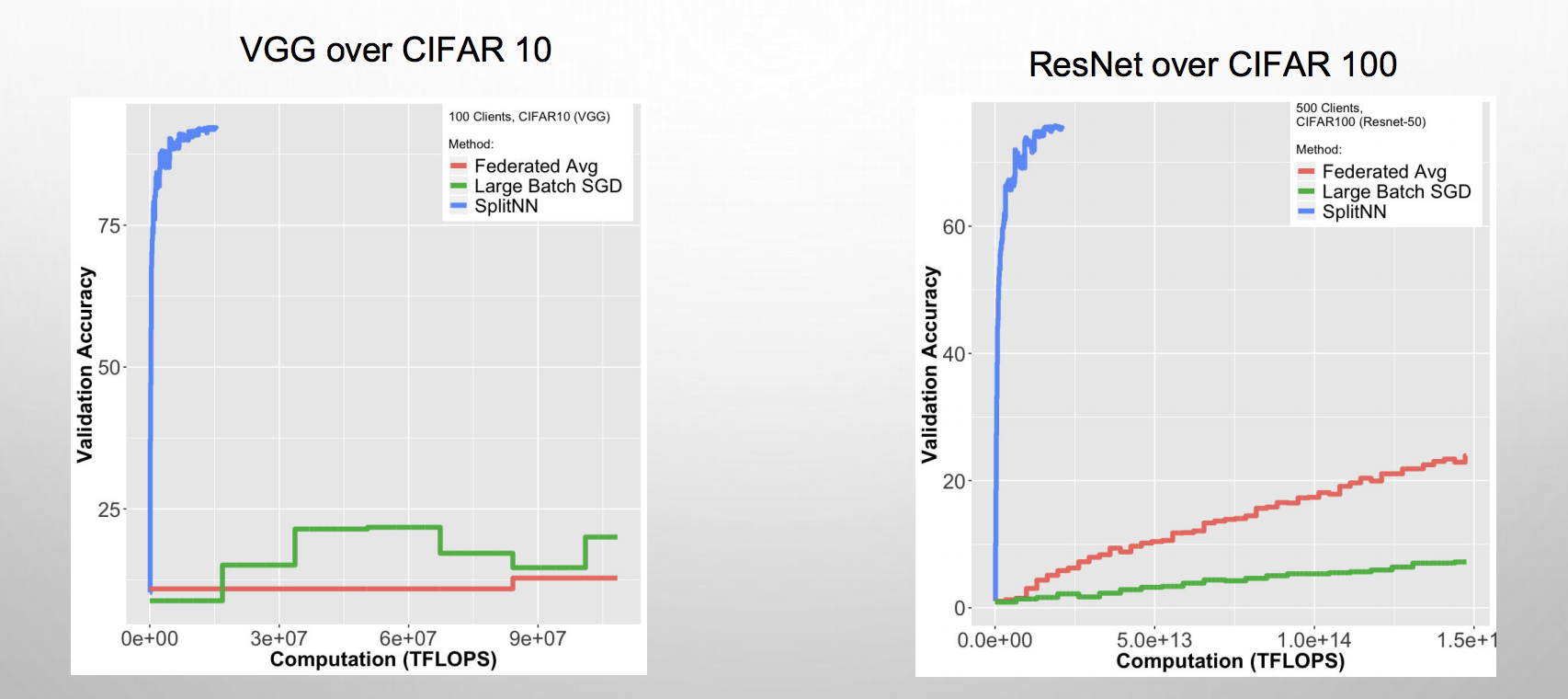

Client-side communication costs are significantly reduced because what the client transmits is limited to the initial layers of the split learning network (splitNN), that is, the layers before the split. Client-side computation costs for learning the network weights are significantly reduced for the same reason.

In terms of model performance, the accuracy of splitNN remained competitive with other distributed deep learning methods, such as federated learning and large-batch synchronous SGD, while placing a drastically smaller computational burden on each client when training with a larger number of clients. The accompanying figures report this in teraflops of computation and gigabytes of communication for split learning used to train ResNet and VGG architectures over 100 and 500 clients on the CIFAR-10 and CIFAR-100 datasets.
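The division of work described above can be sketched as a small training loop. This is an illustrative sketch in plain Python, not the authors' implementation: the `Client` and `Server` classes, the tiny two-segment network, the toy dataset and the learning rate are all assumptions chosen for brevity. It also assumes the simplest arrangement, in which the server receives the labels along with the cut-layer activations.

```python
import math
import random


class Client:
    """Hypothetical client: holds raw data and the layers before the split."""

    def __init__(self, n_in, n_cut, rng):
        self.W = [[rng.uniform(-0.5, 0.5) for _ in range(n_in)]
                  for _ in range(n_cut)]

    def forward(self, x):
        self.x = x
        self.h = [math.tanh(sum(w * xi for w, xi in zip(row, x)))
                  for row in self.W]
        return self.h  # only these cut-layer activations leave the client

    def backward(self, grad_h, lr):
        # Finish backpropagation locally using the gradient sent by the server.
        for i, row in enumerate(self.W):
            d_pre = grad_h[i] * (1.0 - self.h[i] ** 2)
            for j in range(len(row)):
                row[j] -= lr * d_pre * self.x[j]


class Server:
    """Hypothetical server: holds the layers after the split."""

    def __init__(self, n_cut, rng):
        self.w = [rng.uniform(-0.5, 0.5) for _ in range(n_cut)]

    def step(self, h, y, lr):
        y_hat = sum(w * hi for w, hi in zip(self.w, h))
        err = y_hat - y
        grad_h = [err * w for w in self.w]  # computed before updating w
        self.w = [w - lr * err * hi for w, hi in zip(self.w, h)]
        return 0.5 * err ** 2, grad_h  # gradient goes back to the client


def train(epochs=200, lr=0.1, seed=0):
    rng = random.Random(seed)
    client, server = Client(2, 3, rng), Server(3, rng)
    # Toy data (assumption): the target is x[1] - x[0].
    data = [([0.0, 1.0], 1.0), ([1.0, 0.0], -1.0),
            ([1.0, 1.0], 0.0), ([0.0, 0.0], 0.0)]
    losses = []
    for _ in range(epochs):
        total = 0.0
        for x, y in data:
            h = client.forward(x)                  # client -> server
            loss, grad_h = server.step(h, y, lr)   # server -> client
            client.backward(grad_h, lr)
            total += loss
        losses.append(total / len(data))
    return losses
```

Calling `train()` returns the mean loss per epoch. In this sketch the raw input `x` never leaves the `Client` object; only the cut-layer activations and their gradients cross the boundary.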

Versatile plug-and-play configurations of split learning

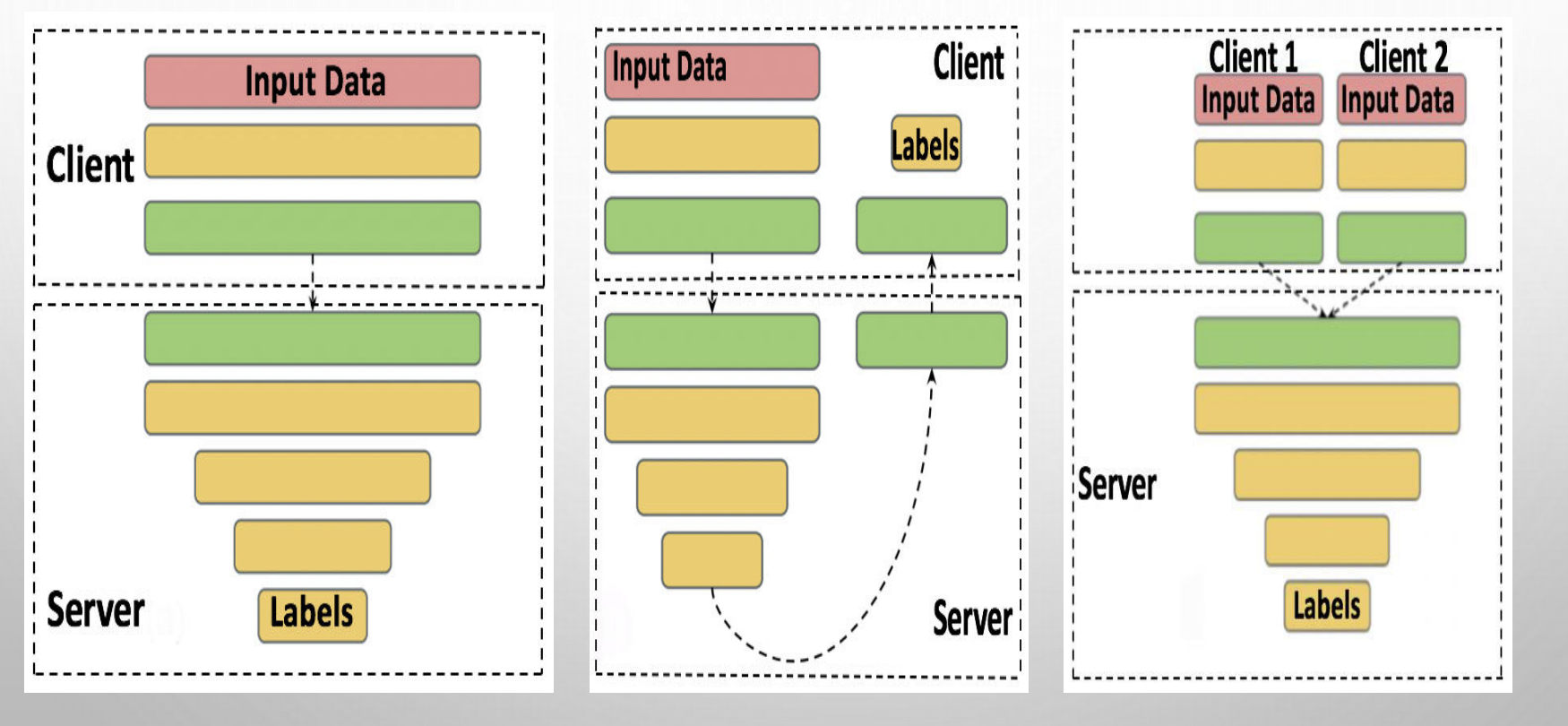

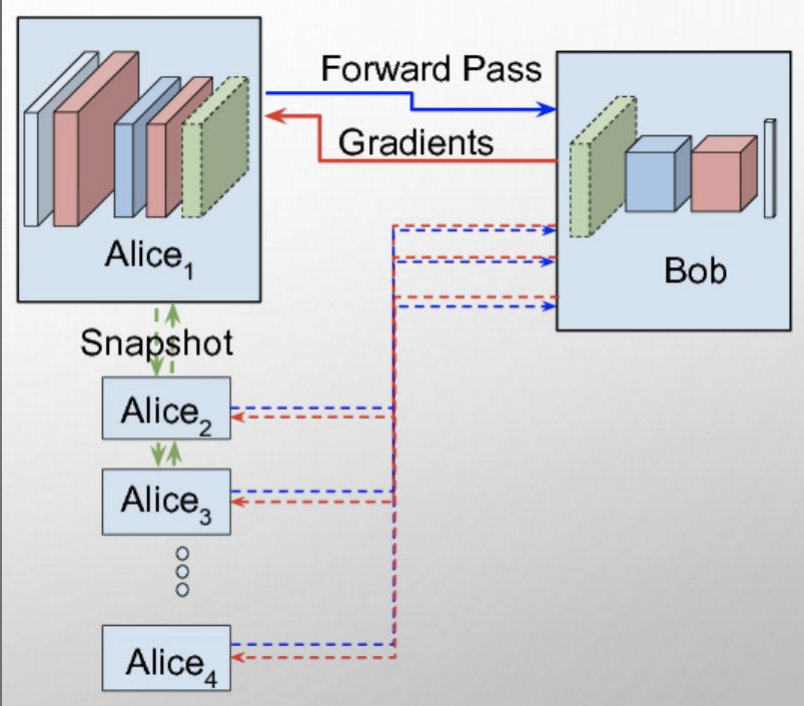

Split learning configurations cater to a variety of practical settings, including i) multiple entities holding different modalities of patient data, ii) centralized and local health entities collaborating on multiple tasks, iii) learning without sharing labels, iv) multi-task split learning, v) multi-hop split learning, and other hybrid possibilities, as illustrated in the accompanying figures and further detailed in our paper here (PDF).
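As one illustration, setting iii) (learning without sharing labels) can be sketched by having the network wrap around so that the client keeps both the first and the last segment. The sketch below is a hedged toy example, not the configuration from the paper: the function `u_shaped_step`, the parameter names `client_front`, `server_mid` and `client_tail`, and the use of a single scalar weight per segment are all assumptions made to keep the example short.

```python
import math


def u_shaped_step(params, x, y, lr=0.1):
    """One training step; returns (loss, messages the server received)."""
    w1 = params["client_front"]
    w2 = params["server_mid"]
    w3 = params["client_tail"]
    server_inbox = []

    # Forward pass
    a = w1 * x                               # client: first segment
    server_inbox.append(("activation", a))   # client -> server
    b = math.tanh(w2 * a)                    # server: middle segment
    y_hat = w3 * b                           # client: last segment
    err = y_hat - y                          # label is used only on the client
    loss = 0.5 * err ** 2

    # Backward pass
    grad_b = err * w3                        # client
    server_inbox.append(("gradient", grad_b))  # client -> server
    d_pre = grad_b * (1.0 - b ** 2)          # server
    grad_a = d_pre * w2                      # server -> client

    # Each party updates only the weights it holds.
    params["client_tail"] = w3 - lr * err * b
    params["server_mid"] = w2 - lr * d_pre * a
    params["client_front"] = w1 - lr * grad_a * x
    return loss, server_inbox
```

In this sketch the server's inbox contains only an activation and a gradient per step; the label `y` is consumed on the client side when the loss is computed.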